AI, Administration Idiocy

Our government, when faced with something new and hard to understand, knows exactly how to respond: make a new law to try to control it. That’s why most of us were unsurprised when the president announced the latest attempt at government control of new technology. The recently announced, bombastically titled order is called the “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence”.

There are so many things wrong with this order that we are required to break our Substack into three parts, because this amount of idiocy could never fit into one essay. This is Part One.

This first part is about the idea that a new form of technology cannot be allowed to develop without bureaucratic regulation. The order’s opening paragraphs say, “My Administration places the highest urgency on governing the development and use of AI safely and responsibly”. So now this very rapidly growing technology must be governed by administrators who have little idea what AI even is (or will become).

Artificial Intelligence has been around for a long time. The loved and hated Autocorrect in your word processor is a form of AI, using a database of dictionaries and grammar to tell you that you are not golfing on a corpse. You may have noticed that many social media apps on your phone, as well as your text messaging, now suggest words to continue a sentence, and that your email program will offer to respond to a question automatically.

Usually, when we say AI now, we are referring to systems built on massive datasets: Large Language Models, which use massive amounts of data to perform functions with great efficiency and can interact with us as if they have personality. Many of us have used the DALL-E image generator, or ChatGPT to get data and written information that is very useful (when accurate).

AI will likely improve our lives in many ways.

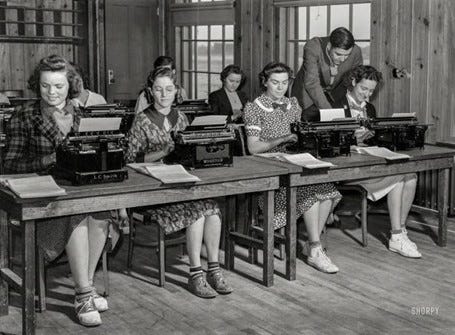

As with any technology that improves efficiency, some jobs will be reduced or disappear entirely, as AI relieves the work burdens in many areas. We don’t need elevator operators any longer, and modern offices do not look like this:

Come to think of it, our offices now look like this:

AI will enable most of us to be more efficient in our jobs, reduce the workload of unpleasant tasks, save time, and improve the speed and creativity of our work. For example, people who use AI chat instead of web search apps are finding that they can get much better data faster than by scrolling through pages of search results. Like everything online, results may not always be accurate, but it was always our responsibility to verify information.

What people find frightening about AI is the speed of the development and acceptance of the technology. The other thing that raises concerns is the increasing development of realistic but false images, recordings, and videos. The term ‘Deep Fake’ simply means that the quality is good enough to fool people into believing what they are hearing or seeing.

These misleading images and videos used to be easy to detect, but they are becoming increasingly sophisticated. Tools for creating fake images and videos are now available all over the web, including this tutorial on how to change the face of someone in a video (click to watch):

You can see how this is a disturbing trend, and one that should make us all skeptical about the material we see online these days.

But the assertions in this executive order are, as expected, foolish and uninformed. In the first section, the order wistfully declares that “Artificial Intelligence must be safe and secure”. The creators of the video linked above must not have gotten that memo.

So how will the government control this technology? First, by doing the impossible:

“…my Administration will help develop effective labeling and content provenance mechanisms, so that Americans are able to determine when content is generated using AI and when it is not.”

(Note that we are protecting only Americans here.) This statement means that they hope to require the use of a watermark, either visible or embedded in the data, to detect whether media has been generated or tampered with. This is nothing new. Microsoft, Google and others have developed watermarking systems to flag fake results. But in many cases it is hard to tell whether something carries a digital watermark at all, and the systems have so far been easy to break. And since we can't rely on other countries or bad actors to follow our president’s executive orders, fake information will remain widespread.
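To see why an embedded watermark can be so fragile, here is a toy sketch in Python. It is not how Microsoft, Google, or anyone else actually watermarks media; it assumes the simplest scheme imaginable, hiding one bit in the least significant bit of each pixel value. The pixel values, the watermark bits, and the function names `embed`, `extract`, and `requantize` are all made up for illustration.

```python
# Toy illustration only: hide a watermark in the least significant bit (LSB)
# of each pixel value, then show that mild re-quantization wipes it out.
# This is an assumed, simplified scheme, not any vendor's real system.

def embed(pixels, bits):
    """Overwrite the LSB of the first len(bits) pixels with the watermark bits."""
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit
    return marked

def extract(pixels, n):
    """Read back the LSB of the first n pixels."""
    return [p & 1 for p in pixels[:n]]

def requantize(pixels, step=4):
    """Crude stand-in for lossy re-encoding: round each value down to a multiple of step."""
    return [(p // step) * step for p in pixels]

pixels = [52, 199, 133, 87, 240, 16, 75, 190]  # hypothetical 8-bit grayscale values
watermark = [1, 0, 1, 1, 0, 1, 0, 1]           # hypothetical watermark bits

marked = embed(pixels, watermark)
recovered = extract(marked, len(watermark))               # matches the watermark
damaged = extract(requantize(marked), len(watermark))     # watermark is gone

print("recovered:", recovered)
print("after re-encoding:", damaged)
```

The marked image differs from the original by at most one brightness level per pixel, so nobody looking at it would notice. But the same subtlety is the weakness: one pass of coarse rounding, a rough stand-in here for recompression or resizing, erases every bit of the mark. Real schemes are sturdier than this, but the cat-and-mouse problem is the same.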

Of course, the order spends a lot of time talking about job protection and making sure that AI somehow preserves “equity.” When promoting the impossible, governments should always go big. The order even contains Minority Report references: “The term ‘crime forecasting’ means the use of analytical techniques to attempt to predict future crimes or crime-related information.” Philip K. Dick has at last had his paranoia vindicated. The document also makes reference to our favorite law to hate, The Patriot Act.

The second part of this Impossible Dream involves government’s favorite tool: punishment. In this case, the order demands one of the most sweeping actions ever put into an Executive Order.

The order goes on to describe pretty much any company using software more recent than Windows 95. The government agencies and administrators involved include the Department of Defense, Homeland Security, the Secretary of Energy, Director of Science, Pandemic Preparedness, and perhaps the Minister of Silly Walks.

The companies in question must, according to this order, provide reports to the federal government on nearly anything they develop in the AI realm. It covers sales or communication with foreign entities, and tests developed for ‘synthetic content’. The document goes on to list all sorts of parameters that need to be reported on. At some point, penalties for non-compliance will be developed.

The order requires the development of “Red Teams”. Red-Teaming is when you hire hackers to attack your software to discover problems, bias, and security holes. Most big software companies do this already, but now they must generate reports on an ongoing basis.

In other words, companies must now staff up for reporting on software development (ah, job creation, excellent!) that may or may not be regulated as pertaining to AI. Since almost all software carries an AI potential, this will be a difficult task to complete. The costs of generating such reports will likely make Sarbanes-Oxley seem like a bargain.

All this is going to be expensive, and will likely slow down the development of AI. Technology always evolves much faster than governments can apply regulations. Congress and bureaucrats will never be able to prevent us from seeing false information. (The false information, that is, that they don't want us to see.) Software and social media organizations will develop their own systems for consumers to use. They will not do this because of government threats, but because it is in their own self-interest to do so. Applying layers of regulation will only slow the development of the tools consumers will want to use.

The best systems will win out in the marketplace. These tools will not prevent people from posting misinformation, including altered sounds and images that appear to be real. Instead, we will all develop an important sixth sense – that of skepticism of what we see and hear. No amount of heavy-handed administration idiocy will protect us better than our own common sense.